Data centers are the backbone of today’s digital world, providing the infrastructure needed for cloud computing, artificial intelligence (AI), big data analytics, and countless other applications that power everything from online services to enterprise systems. As demand for data processing and storage continues to grow, so does the need for more advanced, efficient, and powerful chips. Over the past few years, data center chip technology has undergone rapid advancements, with two critical areas of focus emerging: AI integration and energy efficiency.

In this article, we delve into the latest innovations in data center chip technology, exploring how AI integration and energy efficiency are reshaping the landscape of data processing and operational management.

The Rise of AI Integration in Data Center Chips

As artificial intelligence continues to permeate industries from healthcare and finance to retail and logistics, data centers must evolve to meet the unique demands of AI workloads. Traditional general-purpose chips such as CPUs are poorly suited to the massively parallel computation that AI algorithms, particularly deep learning models, require. This has spurred the development and adoption of AI-optimized chips designed to accelerate AI workloads and reduce latency.

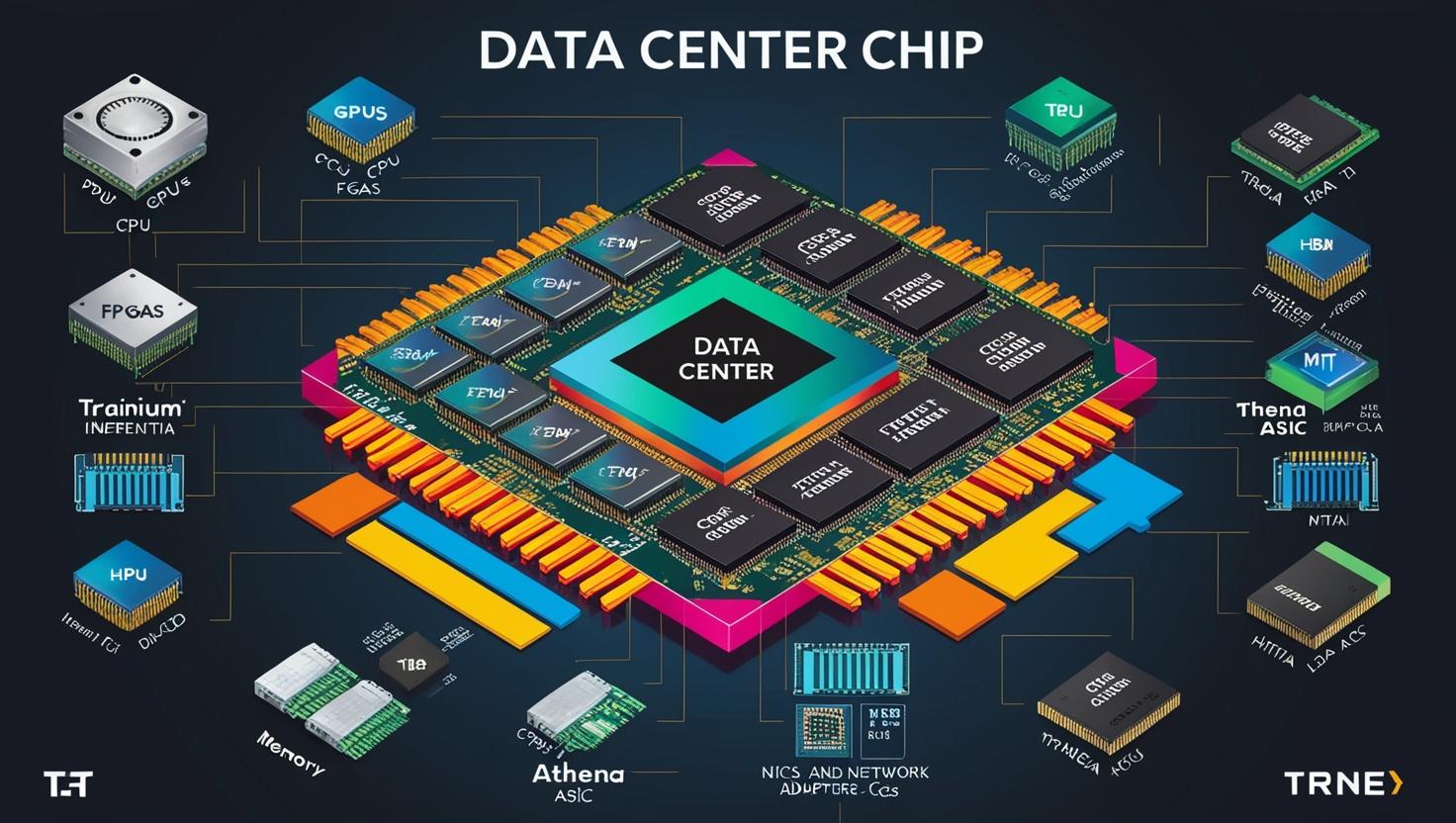

Specialized AI Chips: GPUs, TPUs, and FPGAs

A key advancement in data center chip technology is the rise of specialized processors designed to handle AI tasks more efficiently than traditional CPUs. The most prominent of these are:

- Graphics Processing Units (GPUs): Originally designed to render graphics for video games, GPUs have evolved into powerful parallel processors capable of handling the large-scale computations required for AI. Companies like NVIDIA have pioneered the use of GPUs for AI and deep learning, and GPUs are now widely used in data centers for everything from training AI models to running inference.

- Tensor Processing Units (TPUs): Developed by Google, TPUs are application-specific integrated circuits (ASICs) custom-designed to accelerate machine learning, particularly deep learning. TPUs are highly optimized for matrix multiplication, a core operation in many AI models, which can make them significantly more efficient than GPUs for certain AI workloads.

- Field-Programmable Gate Arrays (FPGAs): FPGAs are chips whose logic can be reconfigured after manufacturing, so the hardware itself can be customized for a specific workload. This flexibility lets data centers tailor hardware to particular AI tasks, such as real-time video processing, data streaming, and advanced analytics.
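
To make the point about matrix multiplication concrete, the plain-Python sketch below computes a dense (fully connected) layer as a matrix product and counts the multiply-accumulate (MAC) operations it needs. The function names and layer sizes are hypothetical, chosen for illustration; this is not any vendor's API. Every output element is an independent dot product, which is why hardware with many parallel arithmetic units, such as GPUs and TPUs, can compute large numbers of them at once.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix product: a (m x k) @ b (k x n) -> (m x n)."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    # Each out[i][j] is an independent dot product of row i of `a` and
    # column j of `b`; parallel hardware can compute many of them at once.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]


def dense_layer(x: Matrix, w: Matrix, bias: List[float]) -> Matrix:
    """Dense layer forward pass: y = x @ w + bias (bias added per output column)."""
    y = matmul(x, w)
    return [[v + bias[j] for j, v in enumerate(row)] for row in y]


def mac_count(m: int, k: int, n: int) -> int:
    """Multiply-accumulate operations for an (m x k) @ (k x n) product."""
    return m * k * n


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]               # batch of 2 inputs, 2 features each
    w = [[0.5, -1.0, 2.0], [1.0, 0.0, -0.5]]   # maps 2 features -> 3 outputs
    print(dense_layer(x, w, [0.0, 0.0, 1.0]))
    # Hypothetical layer size: batch 64, 4096 inputs, 4096 outputs.
    print(mac_count(64, 4096, 4096))
```

For the hypothetical 64 x 4096 x 4096 case, one layer on one batch already needs 1,073,741,824 MACs. Arithmetic volume on that scale is what makes dedicated parallel hardware worthwhile.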

AI-Driven Data Processing in Real-Time

The integration of AI within data centers is also enabling real-time data processing on a massive scale. As organizations increasingly rely on real-time insights to drive decisions, AI-optimized chips enable faster processing and analysis of data streams from many sources. Whether the task is analyzing social media feeds, processing video content, or enabling predictive maintenance in industrial settings, these chips can process large volumes of data much faster than traditional processors.

For instance, data centers using AI-optimized chips can identify trends in large datasets and generate predictions or recommendations in near real time. This is especially valuable in e-commerce, where customer behavior analytics drive personalized recommendations, and in financial services, where real-time market data can inform trading decisions.

The global data center chip industry is expected to grow from USD 206.96 billion in 2025 to USD 390.65 billion by 2030, growing at a CAGR of 13.5% from 2025 to 2030.
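
The growth rate in this estimate can be checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The short Python check below uses only the figures stated in the estimate. The `cagr` helper is a name introduced for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Market estimate figures in USD billions, 2025 -> 2030.
    rate = cagr(206.96, 390.65, 2030 - 2025)
    print(f"{rate:.1%}")  # formats the rate as a percentage with one decimal
```

The result is roughly 13.5%, which matches the stated CAGR.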

The Push for Energy Efficiency in Data Center Chips

As data centers continue to grow and handle ever-increasing volumes of data, the energy consumption of these facilities has become a pressing concern. Data centers consume vast amounts of electricity for both computing and cooling, making energy efficiency a top priority in chip development. Innovations in energy-efficient chip technologies not only reduce operational costs but also make data processing more sustainable.

Advancements in Power-Efficient Chip Design

Energy efficiency in chip design is critical, particularly as data centers scale up their operations. Many of the latest advancements in data center chips are focused on optimizing power usage without sacrificing performance. Some of the key innovations include:

- Low Power Consumption Architectures: Modern chip architectures, including ARM-based chips, focus on reducing the energy needed per computation. For many data center workloads, these chips can deliver better performance per watt than their traditional x86 counterparts. This makes them well suited to high-density environments like data centers, where power efficiency is paramount.

- Silicon Photonics: This emerging technology uses light (photons) instead of electrical signals to transmit data between chips, reducing power consumption and heat generation. Silicon photonics is especially beneficial in high-bandwidth applications such as data center interconnects, where large volumes of data must move without consuming excess energy.

- 3D Chip Stacking: Another innovation aimed at improving energy efficiency is 3D chip stacking, in which multiple layers of silicon are stacked vertically. Stacking shortens the physical distance data must travel between layers, which lowers power consumption and improves speed. Because stacking also concentrates heat in a smaller footprint, 3D designs depend on careful thermal management to realize these gains.
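
Silicon photonics and 3D stacking both target the energy spent moving data. A simplified first-order model, standard in circuit design, makes this concrete. Link power is energy per bit multiplied by bandwidth. The dynamic power of an electrical wire scales with its capacitance, and that capacitance grows roughly linearly with wire length. The 5 pJ/bit and 800 Gb/s figures below are hypothetical and serve only to illustrate the arithmetic.

```latex
\begin{aligned}
P_{\text{link}} &= E_{\text{bit}} \cdot B
  = (5 \times 10^{-12}\,\text{J/bit}) \cdot (8 \times 10^{11}\,\text{bit/s})
  = 4\,\text{W} \\
P_{\text{dyn}} &= \alpha \, C_{\text{wire}} \, V_{dd}^{2} \, f,
  \qquad C_{\text{wire}} \approx c\,\ell
  \;\Rightarrow\; P_{\text{dyn}} \propto \ell
\end{aligned}
```

The symbols in the second line are as follows:

- α is the switching activity.
- V_dd is the supply voltage.
- f is the clock frequency.
- c is the wire capacitance per unit length.
- ℓ is the wire length.

Silicon photonics aims to lower E_bit on high-bandwidth links. 3D stacking shortens ℓ, so under this model, halving a wire's length roughly halves its switching power.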

Energy-Efficient Cooling Solutions

As chip designs become more power-efficient, cooling systems in data centers are also evolving. Traditional air cooling often cannot remove heat fast enough from densely packed, high-performance chips. Liquid cooling and immersion cooling are being adopted to reduce the energy spent on cooling and to keep chips within safe operating temperatures, which in turn lowers the environmental impact of data centers.

For example, liquid cooling circulates a coolant close to the chips, where it absorbs heat far more effectively than air. Immersion cooling goes a step further by submerging the hardware in a non-conductive fluid. This can improve energy efficiency further and allows denser chip configurations within the data center.
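
The effect of cooling on total energy use is commonly summarized with Power Usage Effectiveness (PUE). PUE is total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean zero overhead. The worked example below uses hypothetical average loads in kW over the same period. It assumes cooling is the only overhead that changes.

```latex
\text{PUE} = \frac{E_{\text{facility}}}{E_{\text{IT}}},
\qquad
\text{before: } \frac{1000 + 500}{1000} = 1.5,
\qquad
\text{after: } \frac{1000 + 200}{1000} = 1.2
```

In this hypothetical case, cutting cooling overhead from 500 kW to 200 kW lowers total draw from 1,500 kW to 1,200 kW. That is a 20% reduction for the same IT load.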

The Role of AI in Energy Management

AI integration isn’t limited to data processing; it is also transforming the way data centers manage their energy use. Using AI-driven algorithms, data centers can optimize power consumption in real time by adjusting workloads, cooling systems, and energy distribution. For example, AI can predict peak demand periods and adjust operations dynamically so that energy is used as efficiently as possible.
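
The sketch below shows a minimal version of the "predict peak demand, then adjust operations" loop. Simple exponential smoothing stands in for the AI forecasting model. Whenever the forecast exceeds a power cap, the code defers hypothetical flexible (batch) load. The function names, smoothing factor, cap, and load readings are illustrative assumptions, not values from any real system.

```python
from typing import List, Tuple


def forecast_next(history: List[float], alpha: float = 0.5) -> float:
    """One-step-ahead load forecast via simple exponential smoothing.

    A stand-in for a learned model; alpha in (0, 1] weights recent samples.
    """
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def plan_deferral(history: List[float], flexible_kw: float, cap_kw: float,
                  alpha: float = 0.5) -> Tuple[float, float]:
    """Return (forecast_kw, kw_to_defer) for the next interval.

    Defers just enough flexible load to bring the forecast under the cap,
    but never more than the flexible load actually available.
    """
    predicted = forecast_next(history, alpha)
    excess = max(0.0, predicted - cap_kw)
    return predicted, min(excess, flexible_kw)


if __name__ == "__main__":
    load_kw = [800.0, 900.0, 1000.0, 1100.0]  # hypothetical recent readings
    print(plan_deferral(load_kw, flexible_kw=150.0, cap_kw=950.0))
```

With these inputs, the forecast is 1012.5 kW, so 62.5 kW of flexible load would be deferred. The forecast lags the rising readings, because simple smoothing does not model trend or seasonality. This is one reason production systems use richer forecasting models.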

Some data centers are also experimenting with renewable energy sources, such as solar or wind power, and using AI to monitor and manage energy usage based on availability. AI-enabled energy management systems can help reduce a data center’s carbon footprint while simultaneously improving overall operational efficiency.
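
Scheduling around renewable availability can be sketched as a greedy assignment. The input is an hourly forecast of the renewable share of supply, which a forecasting model would provide and is hypothetical here. The code places deferrable jobs into the hours with the highest share, subject to a per-hour capacity. This is an illustrative heuristic under those assumptions, not a description of any specific operator's system, and the job names are hypothetical.

```python
from typing import Dict, List


def schedule_by_renewables(renewable_share: List[float], jobs: List[str],
                           slots_per_hour: int) -> Dict[int, List[str]]:
    """Greedily assign deferrable jobs to the hours with the most renewables.

    renewable_share[h] is the forecast fraction (0..1) of supply coming from
    renewables in hour h. Each hour can run at most `slots_per_hour` jobs.
    Returns a mapping hour -> jobs scheduled in that hour.
    """
    if slots_per_hour <= 0:
        raise ValueError("slots_per_hour must be positive")
    if len(jobs) > slots_per_hour * len(renewable_share):
        raise ValueError("not enough capacity for all jobs")
    # Hours ordered from highest to lowest renewable share; ties go to the earlier hour.
    hours = sorted(range(len(renewable_share)),
                   key=lambda h: (-renewable_share[h], h))
    schedule: Dict[int, List[str]] = {}
    for i, job in enumerate(jobs):
        # Fill the greenest hour up to capacity, then move to the next one.
        hour = hours[i // slots_per_hour]
        schedule.setdefault(hour, []).append(job)
    return schedule


if __name__ == "__main__":
    share = [0.2, 0.6, 0.9, 0.4]  # hypothetical hourly forecast
    print(schedule_by_renewables(share, ["backup", "etl", "train"], slots_per_hour=2))
```

Here two jobs land in hour 2 (90% renewable) and the third in hour 1 (60%). The heuristic ignores job deadlines and power limits. It only illustrates the principle of shifting flexible work toward hours when more renewable energy is expected.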

Conclusion

The latest advancements in data center chip technology—particularly in AI integration and energy efficiency—are revolutionizing the way data is processed, stored, and transmitted. AI-optimized chips, such as GPUs, TPUs, and FPGAs, are driving faster, more intelligent data processing, enabling real-time analytics and smarter decision-making across industries. Meanwhile, innovations in power-efficient chip design and cooling systems are helping data centers manage energy consumption more effectively, reducing both operational costs and environmental impact.

As the demand for cloud computing, AI, and big data grows, these innovations will continue to transform the data center landscape, making it more powerful, efficient, and sustainable. The future of data center chip technology looks incredibly promising, and these advancements are laying the foundation for the next generation of digital infrastructure that will drive innovation in every sector.